All of the subsequent Shannon information-theoretic quantities we consider may be written as sums and differences of the aforementioned marginal and joint entropies, and all may be extended to multivariate ($\mathbf{X}$, $\mathbf{Y}$, etc.) and/or continuous variables. The basic information-theoretic quantities (entropy, joint entropy, conditional entropy, mutual information (MI), conditional mutual information, and multi-information) are discussed in detail in Section S.1.1 in Supplementary Material, and summarized here in Table. All of these measures are non-negative.
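To make the first claim concrete, the standard identities below write the other basic measures as sums and differences of marginal and joint entropies for discrete variables. The notation is the conventional one (multi-information is also known as total correlation) and is assumed here rather than taken from Table.

```latex
\begin{aligned}
H(X \mid Y) &= H(X, Y) - H(Y) \\
I(X; Y) &= H(X) + H(Y) - H(X, Y) \\
I(X; Y \mid Z) &= H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z) \\
I(X_1; X_2; \ldots; X_G) &= \sum_{g=1}^{G} H(X_g) - H(X_1, X_2, \ldots, X_G)
\end{aligned}
```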

Also, we may write down pointwise or local information-theoretic measures, which characterize the information attributed to specific measurements x, y, and z of variables X, Y, and Z, rather than the traditional expected or average information measures associated with these variables introduced above. Full details are provided in Section S.1.3 in Supplementary Material, and the local form for all of our basic measures is shown here in Table. For example, the Shannon information content or local entropy of an outcome x of a measurement of the variable X is $h(x) = -\log_2 p(x)$.

The goal of the framework is to decompose the information in the next observation $X_{n+1}$ of process X in terms of these information sources. The transfer entropy (TE), arguably the most important measure in the toolkit, has become a very popular tool in complex systems in general, and in computational neuroscience in particular. For multivariate Gaussians, the TE is equivalent (up to a factor of 2) to Granger causality. Extension of the TE to arbitrary source-destination lags, as described in prior work, is incorporated in Table (this is not shown for conditional TE here for simplicity, but is handled in JIDT).
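The local entropy $h(x) = -\log_2 p(x)$ can be illustrated with a short plug-in computation. The following is a minimal standalone Java sketch, not JIDT's API (the class and method names are hypothetical): it estimates $\hat{p}(x)$ by counting, assigns each sample its local value, and shows that averaging the local values recovers the entropy $H(X)$.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: local entropy h(x_n) = -log2 p^(x_n) for a discrete series. */
public class LocalEntropyDemo {

    /** Returns h(x_n) in bits for every sample, using plug-in (frequency-count) probabilities. */
    public static double[] localEntropies(int[] x) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int v : x) {
            counts.merge(v, 1, Integer::sum);
        }
        double[] local = new double[x.length];
        for (int n = 0; n < x.length; n++) {
            double p = counts.get(x[n]) / (double) x.length;
            local[n] = -Math.log(p) / Math.log(2.0);
        }
        return local;
    }

    /** Average of the local values; with plug-in estimates this equals H(X) in bits. */
    public static double average(double[] values) {
        double sum = 0.0;
        for (double v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    public static void main(String[] args) {
        int[] x = {0, 0, 0, 1}; // p^(0) = 0.75, p^(1) = 0.25
        double[] h = localEntropies(x);
        for (int n = 0; n < x.length; n++) {
            System.out.printf("h(x_%d = %d) = %.4f bits%n", n, x[n], h[n]);
        }
        System.out.printf("H(X) = %.4f bits%n", average(h));
    }
}
```

The rare outcome receives the larger local value (2 bits versus about 0.415 bits), which is exactly the per-sample detail that the average entropy hides.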

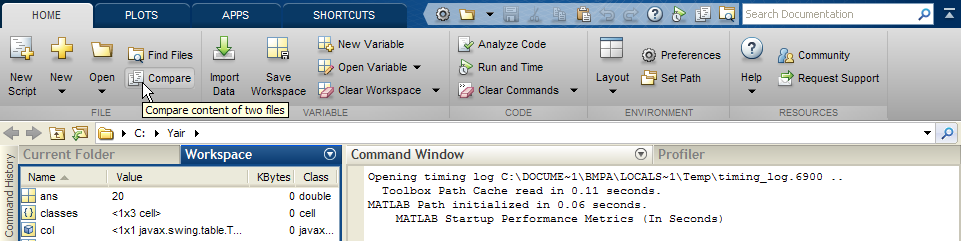

We introduce the Java Information Dynamics Toolkit (JIDT), a Google Code project for studying information storage and transfer in complex systems, and how they unfold in space and time, as information dynamics (Lizier, 2013; Lizier et al., 2014). (2014a,b) (CC-BY license) provides MATLAB code for TE estimation. Ensure that infodynamics.jar is on the Java classpath when your code is compiled and run.
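The classpath requirement can be checked at runtime. The sketch below is a standalone helper (the class name is hypothetical) that tries to load a class by name without initializing it. The probe name `infodynamics.measures.discrete.TransferEntropyCalculatorDiscrete` is assumed to be a class shipped in infodynamics.jar and may need adjusting for other JIDT versions.

```java
/** Hypothetical helper that reports whether a class can be loaded from the classpath. */
public class ClasspathCheck {

    /** Returns true if the named class can be located, without running its static initializers. */
    public static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed JIDT class name, used only as a probe.
        String probe = "infodynamics.measures.discrete.TransferEntropyCalculatorDiscrete";
        if (isOnClasspath(probe)) {
            System.out.println("JIDT classes found on the classpath.");
        } else {
            System.out.println("JIDT classes not found: add infodynamics.jar to the classpath.");
        }
    }
}
```

The jar is typically supplied through the standard `-cp` (or `-classpath`) option of both `javac` and `java`.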

Further, one can consider multivariate sources $\mathbf{Y}$, in which case we refer to the measure $T_{\mathbf{Y} \to X}(k, l)$ as a collective transfer entropy. See the further description of this measure in Section S.1.2 in Supplementary Material, including how to set the history length k. Table also shows the local variants of each of the above measures of information dynamics (presented in full in Section S.1.3 in Supplementary Material). These local variants are particularly important here because they provide a direct, model-free mechanism to analyze how information processing unfolds in time in complex systems. Figure indicates, for example, a local active information storage measurement for time-series process X, and a local transfer entropy measurement from process Y to X.
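A local TE measurement can be sketched for a single discrete source, assuming the standard definition $t_{Y \to X}(n+1) = \log_2 \frac{p(x_{n+1} \mid x_n^{(k)}, y_{n+1-u}^{(l)})}{p(x_{n+1} \mid x_n^{(k)})}$, where $u$ is a source-destination delay ($u = 1$ gives the usual form). This is a standalone plug-in sketch, not JIDT's implementation, and all names are hypothetical. A multivariate source $\mathbf{Y}$ could be handled by first mapping each joint source state to a single integer symbol.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical plug-in estimator of local transfer entropy for discrete time series. */
public class LocalTransferEntropyDemo {

    /** Joins s[from..to] (inclusive) into a key; an empty range gives "". */
    private static String window(int[] s, int from, int to) {
        StringBuilder sb = new StringBuilder();
        for (int i = from; i <= to; i++) {
            sb.append(s[i]).append(',');
        }
        return sb.toString();
    }

    /**
     * Local TE values in bits from source y to destination x, one per target time t
     * (from the first usable t up to x.length - 1). k = destination history length,
     * l = source history length, u = source-destination delay (u >= 1).
     */
    public static double[] localTransferEntropy(int[] y, int[] x, int k, int l, int u) {
        int start = Math.max(k, u + l - 1);
        int count = x.length - start;
        String[] next = new String[count], xPast = new String[count], yPast = new String[count];
        Map<String, Integer> cNextXY = new HashMap<>(), cXY = new HashMap<>();
        Map<String, Integer> cNextX = new HashMap<>(), cX = new HashMap<>();
        for (int i = 0; i < count; i++) {
            int t = start + i;
            next[i] = x[t] + ";";
            xPast[i] = window(x, t - k, t - 1) + ";";
            yPast[i] = window(y, t - u - l + 1, t - u); // source history ending at t - u
            cNextXY.merge(next[i] + xPast[i] + yPast[i], 1, Integer::sum);
            cXY.merge(xPast[i] + yPast[i], 1, Integer::sum);
            cNextX.merge(next[i] + xPast[i], 1, Integer::sum);
            cX.merge(xPast[i], 1, Integer::sum);
        }
        double[] local = new double[count];
        for (int i = 0; i < count; i++) {
            // p^(next | xPast, yPast) / p^(next | xPast), written in terms of raw counts
            double ratio = (double) cNextXY.get(next[i] + xPast[i] + yPast[i]) * cX.get(xPast[i])
                    / ((double) cXY.get(xPast[i] + yPast[i]) * cNextX.get(next[i] + xPast[i]));
            local[i] = Math.log(ratio) / Math.log(2.0);
        }
        return local;
    }

    /** Mean of the local values, which equals the plug-in (average) TE. */
    public static double average(double[] values) {
        double sum = 0.0;
        for (double v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    public static void main(String[] args) {
        // Toy data: x copies y with a delay of 2 steps (x[t] = y[t - 2]).
        int[] y = {0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 0, 1, 1};
        int[] x = new int[y.length];
        for (int t = 2; t < y.length; t++) {
            x[t] = y[t - 2];
        }
        for (int u = 1; u <= 2; u++) {
            double[] local = localTransferEntropy(y, x, 1, 1, u);
            System.out.printf("u = %d: average TE = %.4f bits%n", u, average(local));
        }
    }
}
```

Individual local values can be negative even though their average is not, which is what makes them informative about where in time the source is misinformative about the destination.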

Finally, in Section S.1.5 in Supplementary Material, we describe how one can evaluate whether an MI, conditional MI, or TE is statistically different from 0, and therefore represents sufficient evidence for a (directed) relationship between the variables. This is done via permutation testing, which constructs appropriate surrogate populations of time-series and measurements under the null hypothesis of no directed relationship between the given variables.

Estimation Techniques

While the mathematical formulations of the quantities in Section 2.1 are relatively straightforward, empirically estimating them from a finite number N of samples of time-series data can be a complex process, and depends on the type of data you have and its properties. Estimators are typically subject to bias and variance due to the finite sample size. Here, we briefly introduce the various types of estimators that are included in JIDT, referring the reader to Section S.2 in Supplementary Material for more detailed discussion. For discrete variables X, Y, Z, etc., the definitions in Section 2.1 may be used directly by counting the matching configurations in the available data to obtain the relevant plug-in probability estimates (e.g., $\hat{p}(x \mid y)$ and $\hat{p}(x)$ for MI).
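Plug-in estimation and permutation testing can be combined in a few lines. The sketch below is standalone Java and not JIDT's API (class and method names are hypothetical). It computes the MI as the average of $\log_2 \frac{\hat{p}(x \mid y)}{\hat{p}(x)}$ from counts, then builds surrogates by shuffling y, which destroys any x-y relationship while preserving y's distribution. The p-value is estimated as the fraction of surrogates whose MI is at least the observed value, one common convention among several.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

/** Hypothetical plug-in MI estimator with a permutation significance test. */
public class PluginMiPermutationDemo {

    private static long pairKey(int a, int b) {
        return ((long) a << 32) | (b & 0xffffffffL);
    }

    /** Plug-in MI in bits: average over samples of log2( p^(x|y) / p^(x) ). */
    public static double mutualInformation(int[] x, int[] y) {
        int n = x.length;
        Map<Integer, Integer> cX = new HashMap<>(), cY = new HashMap<>();
        Map<Long, Integer> cXY = new HashMap<>();
        for (int i = 0; i < n; i++) {
            cX.merge(x[i], 1, Integer::sum);
            cY.merge(y[i], 1, Integer::sum);
            cXY.merge(pairKey(x[i], y[i]), 1, Integer::sum);
        }
        double sum = 0.0;
        for (int i = 0; i < n; i++) {
            // p^(x|y) / p^(x) = c(x,y) * n / (c(y) * c(x))
            double ratio = (double) cXY.get(pairKey(x[i], y[i])) * n
                    / ((double) cY.get(y[i]) * cX.get(x[i]));
            sum += Math.log(ratio) / Math.log(2.0);
        }
        return sum / n;
    }

    /** Fraction of shuffled-y surrogates whose MI is >= the observed MI. */
    public static double permutationPValue(int[] x, int[] y, int numSurrogates, long seed) {
        double observed = mutualInformation(x, y);
        Random rng = new Random(seed);
        int[] shuffled = y.clone();
        int atLeastAsLarge = 0;
        for (int s = 0; s < numSurrogates; s++) {
            for (int i = shuffled.length - 1; i > 0; i--) { // Fisher-Yates shuffle
                int j = rng.nextInt(i + 1);
                int tmp = shuffled[i];
                shuffled[i] = shuffled[j];
                shuffled[j] = tmp;
            }
            if (mutualInformation(x, shuffled) >= observed) {
                atLeastAsLarge++;
            }
        }
        return (double) atLeastAsLarge / numSurrogates;
    }

    public static void main(String[] args) {
        int[] y = {0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1};
        int[] x = y.clone(); // x is an exact copy of a balanced binary y
        System.out.printf("MI = %.4f bits%n", mutualInformation(x, y));
        System.out.printf("p  = %.3f%n", permutationPValue(x, y, 1000, 42L));
    }
}
```

Because the surrogates keep each marginal distribution fixed, any finite-sample bias in the plug-in MI is present in the null population too, so the test compares like with like.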